Introduction

The market for medical simulators is growing rapidly as advances in technology make these devices practical. Creating virtual environments for the medical industry can provide cost-effective training and the opportunity for repeated practice. This project moves beyond surgical tools and instruments and uses a haptic device to study the role of “touch” in diagnosis. What lies under the skin cannot be seen, so applying pressure to the area (and knowing what it should feel like) leads to the initial diagnosis.

What is a haptic device?

A haptic device is an electromechanical device that, together with its control software, lets the operator feel and touch objects in a virtual three-dimensional environment. The device resists movement at specific locations so that the operator can feel where virtual objects have been placed. It can mimic sensations ranging from a slippery, malleable substance to one that is rock solid. This sensation of touch is referred to as force feedback from the system. In this paper, the terms haptic device and cursor are used interchangeably.
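Force feedback of this kind is often rendered with a penalty (spring-damper) model: once the cursor penetrates a virtual surface, the device pushes back with a force proportional to the penetration depth, plus a term proportional to the cursor's velocity. The following C++ sketch illustrates the idea for a single flat wall. The model choice, the `Material` struct, the `wallForce` function, and all parameter values are assumptions for illustration, not taken from this project, and the calls that read the cursor position from the device and send the force back to it are omitted.

```cpp
#include <algorithm>

// Hypothetical material parameters for a penalty-based (spring-damper) virtual wall.
// Any numbers plugged in are illustrative assumptions, not measured tissue properties.
struct Material {
    double stiffness;  // k, in N/m: how strongly the surface resists penetration
    double damping;    // b, in N*s/m: resistance proportional to cursor velocity
};

// Returns the force (N) pushing the cursor back out of a flat wall located at x = 0.
// x is the cursor position along the wall normal (m); x < 0 means it is inside the wall.
// v is the cursor velocity along the same axis (m/s); negative v means moving deeper.
double wallForce(const Material& m, double x, double v) {
    if (x >= 0.0) {
        return 0.0;  // free space: no contact, no resistance
    }
    const double penetration = -x;
    const double force = m.stiffness * penetration - m.damping * v;
    // A wall can only push the cursor out, never pull it in.
    return std::max(force, 0.0);
}
```

With assumed values `Material soft{200.0, 1.0}` and `Material hard{2000.0, 2.0}`, a cursor held still 5 mm inside the wall receives 1 N from the soft material and 10 N from the hard one. Raising the stiffness is what moves the sensation from malleable toward rock solid.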

Future Applications for Haptics

This research focuses mainly on applications for haptics in the medical industry, specifically in training and simulation environments. Providing proper and realistic feedback in a virtual simulation can teach an inexperienced doctor to become sensitive to the significance of different levels of pressure. Combined with the complex graphical environments used for surgical simulations, haptics can, for example, replicate the texture of organs, tissues, and bones. These simulations can also be extended to train individuals at all levels of the medical professions, including doctors, nurses, medical technicians, and military medical units.

Functional Description

Phase 1

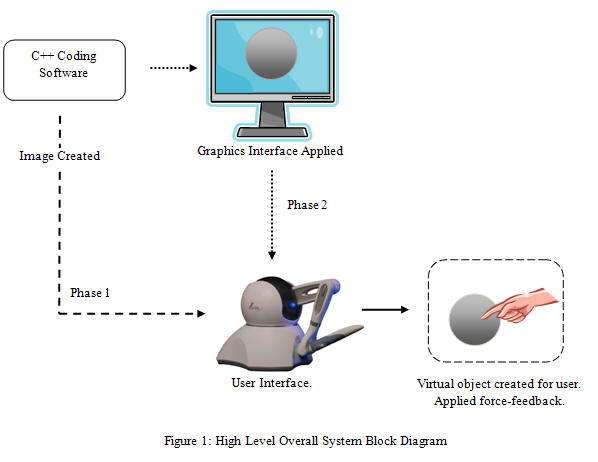

As shown in Figure 1, the first phase of this project relies heavily on creating a virtual environment programmed in C++. The virtual environment will render force feedback through the device so that the user can differentiate between hard and soft surfaces.

Phase 1 will not incorporate a graphical interface; instead, the virtual environment will be felt, not seen, by the user.
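A force-only scene of the kind Phase 1 describes could be sketched as a set of invisible objects, each with its own stiffness, plus a function that returns the force on the cursor at a given position. The C++ sketch below assumes spherical objects and a simple penalty (spring) model as a stand-in; `Vec3`, `SphereObject`, and `sceneForce` are hypothetical names, and reading the cursor position from the device and sending the force back depend on the device SDK, which this document does not name.

```cpp
#include <cmath>
#include <vector>

// Minimal 3-D vector type for the sketch.
struct Vec3 {
    double x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// A hypothetical invisible object in the Phase 1 scene: a sphere with its own stiffness.
struct SphereObject {
    Vec3 center;       // m
    double radius;     // m
    double stiffness;  // N/m; low = soft surface, high = hard surface
};

// Force (N) on the haptic cursor at position p (m), summed over every sphere it penetrates.
// Nothing is drawn: the scene exists only as this force computation.
Vec3 sceneForce(const std::vector<SphereObject>& scene, Vec3 p) {
    Vec3 total{0.0, 0.0, 0.0};
    for (const SphereObject& s : scene) {
        const Vec3 offset = p - s.center;
        const double dist = length(offset);
        const double penetration = s.radius - dist;
        if (penetration <= 0.0 || dist == 0.0) {
            continue;  // outside this sphere, or exactly at its center (normal undefined)
        }
        const Vec3 normal = (1.0 / dist) * offset;  // outward unit normal
        total = total + (s.stiffness * penetration) * normal;
    }
    return total;
}
```

For example, with a soft sphere (200 N/m) and a hard sphere (2000 N/m), each 5 cm in radius, a cursor resting 1 cm inside either surface receives 2 N or 20 N respectively, pointing outward. Penalty models like this are simple to implement but can let the cursor pass through thin objects, which is why proxy-based ("god-object") methods are a common alternative.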

Phase 2

The second phase will involve attaching graphics and images to the objects created. The software that renders these images will be based on OpenGL, an open, cross-platform graphics programming interface. Importing three-dimensional images into the system will also be considered at this stage.